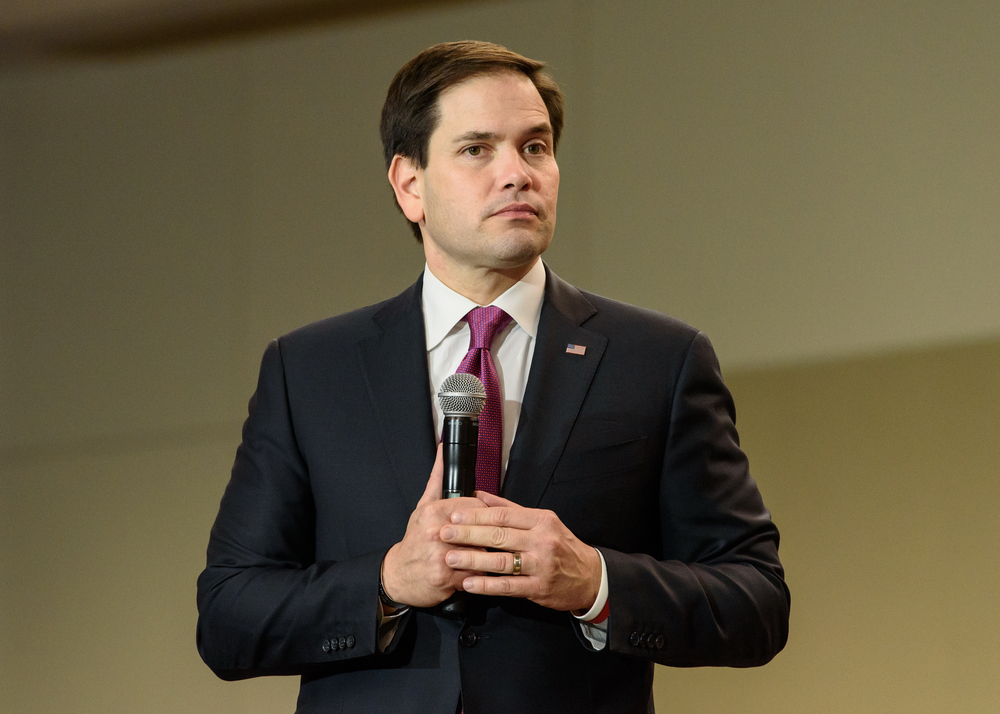

If someone called you claiming to be a government official, would you know if their voice was real? This question became frighteningly relevant this week when a cybercriminal used social engineering and AI to impersonate Secretary of State Marco Rubio, fooling high-level officials with fake voice messages that sounded exactly like him. It raises a critical concern: would other world leaders be able to tell the difference, or would they fall for it too?

The Rubio Incident: A Wake-Up Call

In June 2025, an unknown attacker created a fake Signal account using the display name “Marco.Rubio@state.gov” and began contacting government officials with AI-generated voice messages that perfectly mimicked the Secretary of State’s voice and writing style. The imposter successfully reached at least five high-profile targets, including three foreign ministers, a U.S. governor, and a member of Congress.

The attack wasn’t just about pranks or publicity. U.S. authorities believe the culprit was “attempting to manipulate powerful government officials with the goal of gaining access to information or accounts.” This represents a sophisticated social engineering attack that could have serious national and international security implications.

Why Voice Scams Are Exploding

The Rubio incident isn’t isolated. In May, someone breached the phone of White House Chief of Staff Susie Wiles and began placing calls and sending messages to senators, governors, and business executives while pretending to be Wiles. These attacks are becoming more common because:

- AI voice cloning is now accessible to everyone: What once required Hollywood-level resources can now be done with free online tools

- Social media provides voice samples: Just a few seconds of someone’s voice from a video or podcast is enough

- People trust familiar voices: We’re psychologically wired to trust voices we recognize

- High-value targets are everywhere: From government officials to your own family members

It’s Not Just Politicians – Nobody Is Immune

While the Rubio case involved government officials, these same techniques are being used against everyday Americans. A recent McAfee study found that 59% of Americans say they or someone they know has fallen for an online scam in the last 12 months, with scam victims losing an average of $1,471. In 2024, our research revealed that 1 in 3 people believe they have experienced some kind of AI voice scam.

Some of the most devastating are “grandparent scams” where criminals clone a grandchild’s voice to trick elderly relatives into sending money for fake emergencies. Deepfake scam victims have reported losses ranging from $250 to over half a million dollars.

Common AI voice scam scenarios:

- Family emergency calls: “Grandma, I’m in jail and need bail money”

- CEO fraud: Fake executives asking employees to transfer money

- Investment scams: Celebrities appearing to endorse get-rich-quick schemes

- Romance scams: Building fake relationships using stolen voices

From Mission Impossible to Mission Impersonated

One big reason deepfake scams are exploding? The tools are cheap, powerful, and incredibly easy to use. McAfee Labs tested 17 deepfake generators and found many are available online for free or with low-cost trials. Some are marketed as “entertainment” — made for prank calls or spoofing celebrity voices on apps like WhatsApp. But others are clearly built with scams in mind, offering realistic impersonations with just a few clicks.

Not long ago, creating a convincing deepfake took experts days or even weeks. Now? It can cost less than a latte and take less time to make than the latte takes to drink. Simple drag-and-drop interfaces mean anyone, even with zero technical skills, can clone voices or faces.

Even more concerning: open-source libraries provide free tutorials and pre-trained models, helping scammers skip the hard parts entirely. While some of the more advanced tools require a powerful computer and graphics card, a decent setup costs under $1,000, a tiny price tag when you consider the payoff.

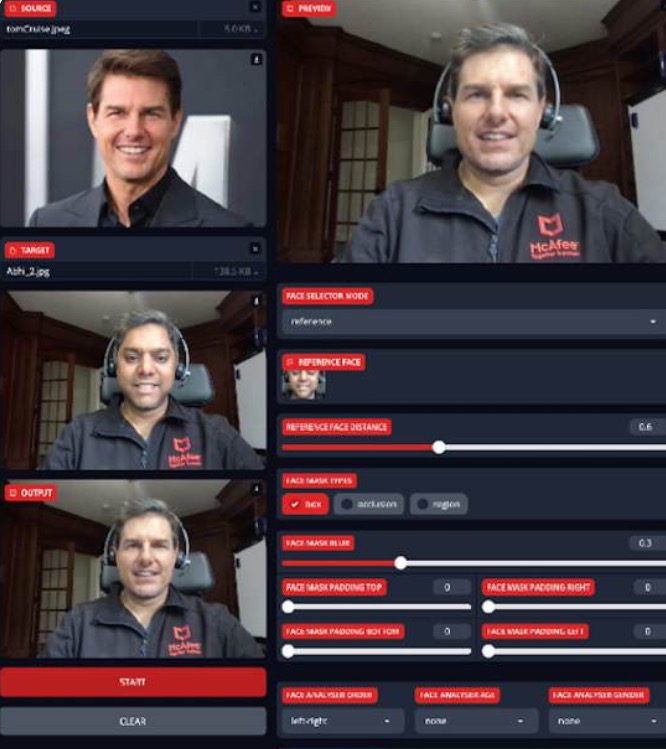

Globally, 87% of scam victims lose money, and 1 in 5 lose over $1,000. Just a handful of successful scams can easily pay for a scammer’s gear and then some. In one McAfee test, for just $5 and 10 minutes of setup time, we created a real-time avatar that made us look and sound like Tom Cruise. Yes, it’s that easy — and that dangerous.

Figure 1. Demonstrating the creation of a highly convincing deepfake
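
To make the payoff claim concrete, here is a rough back-of-the-envelope sketch in Python. It only uses figures quoted in this article: a setup costing up to $1,000, the $5 avatar test, the $1,471 average loss, and the $250 low-end loss. The function name `scams_to_break_even` is hypothetical, and the sketch assumes, as a simplification, that a victim's entire loss ends up with the scammer and that there are no other costs.

```python
import math


def scams_to_break_even(setup_cost: float, loss_per_victim: float) -> int:
    """Return how many successful scams would cover the setup cost.

    Simplifying assumption (not from the article): the scammer keeps the
    victim's full loss and has no other expenses.
    """
    if setup_cost < 0 or loss_per_victim <= 0:
        raise ValueError("setup_cost must be >= 0 and loss_per_victim > 0")
    return math.ceil(setup_cost / loss_per_victim)


# Figures quoted in the article.
HIGH_END_SETUP = 1_000   # "a decent setup costs under $1,000"
CHEAP_SETUP = 5          # the $5 real-time avatar test
AVERAGE_LOSS = 1_471     # average loss per scam victim
LOW_END_LOSS = 250       # low end of reported deepfake scam losses

print(scams_to_break_even(HIGH_END_SETUP, AVERAGE_LOSS))  # 1
print(scams_to_break_even(HIGH_END_SETUP, LOW_END_LOSS))  # 4
print(scams_to_break_even(CHEAP_SETUP, LOW_END_LOSS))     # 1
```

Under these assumptions, even the most expensive setup paired with the smallest reported losses is covered after four successful scams, which is why cheap tools change the economics so sharply.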

Fighting Back: How McAfee’s Deepfake Detector Works

Recognizing the urgent need for protection, McAfee developed Deepfake Detector, one of the most advanced consumer tools available today for fighting AI-powered scams.

Key Features That Protect You

- Near-Instant Detection: McAfee Deepfake Detector uses advanced AI to alert you within seconds if a video has AI-generated audio, helping you quickly identify real vs. fake content in your browser.

- Privacy-First Design: The entire identification process occurs directly on your PC, maximizing on-device processing to keep private user data off the cloud. McAfee does not collect or record a user’s audio in any way.

- Advanced AI Technology: McAfee’s AI detection uses transformer-based Deep Neural Network (DNN) models with a 96% accuracy rate.

- Seamless Integration: Deepfake Detector spots deepfakes for you right in your browser, without any extra clicks.

How It Would Have Helped in the Rubio Case

While McAfee’s Deepfake Detector is built to identify manipulated audio within videos, it points to the kind of technology that’s becoming essential in situations like this. If the impersonation attempt had taken the form of a video message posted or shared online, Deepfake Detector could have:

- Analyzed the video’s audio within seconds

- Flagged signs of AI-generated voice content

- Alerted the viewer that the message might be synthetic

- Helped prevent confusion or harm by prompting extra scrutiny

Our technology uses advanced AI detection techniques — including transformer-based deep neural networks — to help consumers discern what’s real from what’s fake in today’s era of AI-driven deception.

While the consumer-facing version of our technology doesn’t currently scan audio-only content like phone calls or voice messages, the Rubio case shows why AI detection tools like ours are more critical than ever, especially as threats evolve across video, audio, and beyond. It also shows why it’s crucial for the cybersecurity industry to keep evolving at the speed of AI.

How To Protect Yourself: Practical Steps

While technology like McAfee’s Deepfake Detector provides powerful protection, you should also:

- Be skeptical of “urgent” requests

- Verify identity through an alternative channel, such as calling back on a number you already have

- Ask questions only the real person would know, using secret phrases or safe words

- Be wary of requests for money or sensitive information

- Pause if the message stirs strong emotion (fear, panic, urgency) and ask yourself: would this person really say that?
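
For readers who like a concrete checklist, the steps above can be sketched as a few lines of Python. This is only an illustration of the habit, not a detection tool and not how Deepfake Detector works. The `IncomingRequest` class, its field names, and the `red_flags` function are all hypothetical names chosen for this example.

```python
from dataclasses import dataclass


@dataclass
class IncomingRequest:
    """Hypothetical record of what you noticed about a call or message."""
    is_urgent: bool
    asks_for_money_or_sensitive_info: bool
    stirs_strong_emotion: bool
    verified_via_other_channel: bool
    knew_safe_word: bool


def red_flags(request: IncomingRequest) -> list[str]:
    """Return the warning signs from the checklist that apply."""
    flags = []
    if request.is_urgent:
        flags.append("urgent request")
    if request.asks_for_money_or_sensitive_info:
        flags.append("asks for money or sensitive information")
    if request.stirs_strong_emotion:
        flags.append("stirs fear, panic, or urgency")
    if not request.verified_via_other_channel:
        flags.append("identity not verified through another channel")
    if not request.knew_safe_word:
        flags.append("did not know the safe word")
    return flags


# Example: a classic "grandparent scam" call.
call = IncomingRequest(
    is_urgent=True,
    asks_for_money_or_sensitive_info=True,
    stirs_strong_emotion=True,
    verified_via_other_channel=False,
    knew_safe_word=False,
)
for flag in red_flags(call):
    print("Pause and verify:", flag)
```

Any non-empty result is a cue to stop, hang up, and confirm the request through a channel you already trust before doing anything else.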

The Future of Voice Security

The Rubio incident shows that no one is immune to AI voice scams. It also demonstrates why proactive detection technology is becoming essential. Knowledge is power, and this has never been truer than in today’s AI-driven world.

The race between AI-powered scams and AI-powered protection is intensifying. By staying informed, using advanced detection tools, and maintaining healthy skepticism, we can stay one step ahead of cybercriminals who are literally trying to steal our voices and our trust.